Technical Problems of Service Robots

Service robots open up new fields of robotics, but they also require requirements and goals to be revised for both the design and the operation of robotic solutions. Human-machine interaction and the corresponding interfaces are among the key factors that determine whether a service robot succeeds or fails in completing a task flexibly (Ceccarelli 2011).

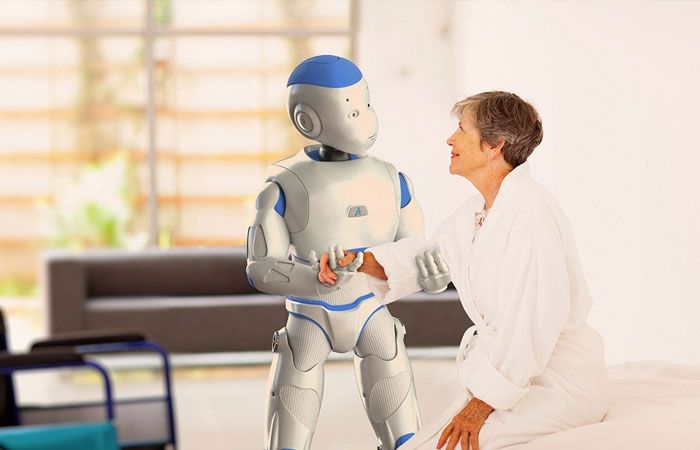

On the one hand, Socially Assistive Robots (SARs) have attracted attention as an innovative tool in elderly-care systems. A Spoken Dialogue System (SDS) is a computer system that uses spoken language to interact with users and accomplish tasks; embedded with AI applications and installed inside AI robots, it helps them follow the scripts of human-to-human interaction (Edwards et al. 2018). However, a remotely controlled robot for elder care depends heavily on the operator's spatial perception and interaction, in terms of the perceived depth, distance, orientation and configuration of objects. Designing the User Interaction (UI) control system therefore requires considering all the problems operators face, as well as ensuring that the commands they give the robot do not injure elderly people or damage objects (Chivarov & Shivarov 2016).

On the other hand, the primary goal of a well-designed AI robot is to serve people without bias. However, algorithms can deviate unpredictably and treat some people unfairly. Moreover, when a commercial computer-vision system misclassifies people, the resulting unfair discrimination can reinforce stereotypes. For instance, Amazon's facial-recognition system Rekognition, when used for gender classification, makes 30 percent more mistakes on dark-skinned women than on light-skinned men (Righetti, Madhavan & Chatila 2019). Because training datasets in machine learning often lack diversity, algorithmic-fairness methods should be developed to mitigate the risk of bias.
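A disparity like the one reported for Rekognition is typically quantified by computing a classifier's error rate separately for each demographic group and comparing the results. The following is a minimal sketch of such a group-wise error audit. It assumes labelled test records of the form (group, true label, predicted label). The function names `group_error_rates` and `error_rate_gap`, the group names, and the toy data are hypothetical illustrations and do not reproduce the actual Rekognition evaluation.

```python
from collections import defaultdict


def group_error_rates(records):
    """Return the misclassification rate for each demographic group.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    totals = defaultdict(int)  # number of test samples per group
    errors = defaultdict(int)  # number of misclassified samples per group
    for group, true_label, predicted in records:
        totals[group] += 1
        if predicted != true_label:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


def error_rate_gap(rates):
    """Difference between the worst and best group error rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy audit data (NOT real Rekognition results).
audit = [
    ("darker_female", "female", "male"),
    ("darker_female", "female", "female"),
    ("darker_female", "female", "male"),
    ("darker_female", "female", "female"),
    ("lighter_male", "male", "male"),
    ("lighter_male", "male", "male"),
    ("lighter_male", "male", "male"),
    ("lighter_male", "male", "female"),
]

rates = group_error_rates(audit)
print(rates)                  # {'darker_female': 0.5, 'lighter_male': 0.25}
print(error_rate_gap(rates))  # 0.25
```

A large gap between groups signals that the model or its training data should be examined. Fairness-mitigation methods, such as collecting more diverse training data, aim to shrink that gap without sacrificing overall accuracy.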